Containerlab Service Deploy Netbox Task¤

task api name:

deploy_netbox

Containerlab service deploy_netbox task is designed to deploy network topologies using devices data retrieved from Netbox. This task automates the deployment process by fetching nodes and links data from Netbox and constructing the topology file, organizing it into a specific folder structure, and executing the containerlab deploy command with the appropriate arguments.

Containerlab Deploy Netbox Task Overview¤

The deploy_netbox task provides the following features:

-

Automated Topology Deployment: Deploys a topology sourcing nodes and links data from Netbox using either or all of these:

- Netbox Devices List - use provided device names to construct topology and deploy lab

- Netbox Tenant - source devices for given tenant and deploy the lab

- Netbox Device Filters - fetch devices data from Netbox GraphQL API and deploy the lab

-

Topology Links Sourcing - links formed using Netbox devices' connections and circuits data.

- Reconfiguration: Supports reconfiguring an already deployed lab.

- Node Filtering: Allows deploying specific nodes using a filter.

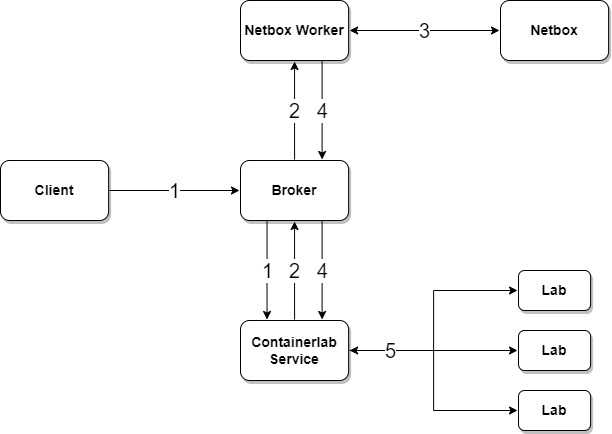

How It Works¤

deploy_netbox task uses Netbox service get_containerlab_inventory task to fetch topology inventory data from Netbox.

-

Client submits request to Containerlab service to deploy a lab

-

Containerlab worker sends job request to Netbox service to retrieve topology data for requested devices

-

Netbox service fetches data from the Netbox and constructs Containerlab inventory

-

Netbox returns lab inventor data back to Containerlab worker

-

Containerlab worker deploys lab using topology data provided by Netbox service

Device Config Context Containerlab Parameters¤

Containerlab node details can be defined under device configuration context norfab.containerlab path, for example:

{

"norfab": {

"containerlab": {

"kind": "ceos",

"image": "ceos:latest",

"mgmt-ipv4": "172.100.100.10/24",

"ports": [

{10000: 22},

{10001: 830}

],

... any other containerlab node parameters ...

"interfaces_rename": [

{

"find": "Ethernet",

"replace": "eth",

"use_regex": false

}

]

}

}

}

interfaces_rename- is a list of one or more interface renaming instructions, each item must havefindandreplacedefined, optionaluse_regexflag specifies whether to use regex based pattern substitution.kind- uses Netbox deviceplatformfield value by defaultimage- usesimagevalue if provided, otherwise uses{kind}:latest

Containerlab Deploy Netbox Task Sample Usage¤

Below is an example of how to use the Containerlab deploy Netbox task to deploy a topology.

Examples

nf#containerlab deploy-netbox lab-name foobar devices fceos4 fceos5

--------------------------------------------- Job Events -----------------------------------------------

31-May-2025 13:02:29.525 9e3b29210e1140f8b3a311e8c4669ca4 job started

31-May-2025 13:02:29.533 INFO containerlab-worker-1 running containerlab.deploy_netbox - Checking existing containers

31-May-2025 13:02:29.573 INFO containerlab-worker-1 running containerlab.deploy_netbox - Existing containers found, retrieving details

31-May-2025 13:02:29.574 INFO containerlab-worker-1 running containerlab.deploy_netbox - 172.100.100.0/24 already in use, allocating new subnet

31-May-2025 13:02:29.575 INFO containerlab-worker-1 running containerlab.deploy_netbox - Collected TCP/UDP ports used by existing containers

31-May-2025 13:02:29.576 INFO containerlab-worker-1 running containerlab.deploy_netbox - foobar allocated new subnet 172.100.102.0/24

31-May-2025 13:02:29.576 INFO containerlab-worker-1 running containerlab.deploy_netbox - foobar fetching lab topology data from Netbox

31-May-2025 13:02:31.090 INFO containerlab-worker-1 running containerlab.deploy_netbox - foobar topology data retrieved from Netbox

31-May-2025 13:02:31.094 INFO containerlab-worker-1 running containerlab.deploy_netbox - foobar topology data saved to

'/home/norfab/norfab/tests/nf_containerlab/__norfab__/files/worker/containerlab-worker-1/topologies/foobar/foobar.yaml'

31-May-2025 13:02:31.095 INFO containerlab-worker-1 running containerlab.deploy_netbox - foobar deploying lab using foobar.yaml topology file

31-May-2025 13:02:31.123 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Containerlab started version=0.67.0

31-May-2025 13:02:31.134 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Parsing & checking topology file=foobar.yaml

31-May-2025 13:02:31.145 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Creating docker network name=br-foobar IPv4 subnet=172.100.102.0/24 IPv6 subnet="" MTU=0

31-May-2025 13:02:31.257 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Creating lab directory

path=/home/norfab/norfab/tests/nf_containerlab/__norfab__/files/worker/containerlab-worker-1/topologies/foobar/clab-foobar

31-May-2025 13:02:31.268 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO config file

'/home/norfab/norfab/tests/nf_containerlab/__norfab__/files/worker/containerlab-worker-1/topologies/foobar/clab-foobar/fceos4/flash/startup-config' for node 'fceos4' already exists and will not be generated/reset

31-May-2025 13:02:31.280 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Creating container name=fceos4

31-May-2025 13:02:31.291 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO config file

'/home/norfab/norfab/tests/nf_containerlab/__norfab__/files/worker/containerlab-worker-1/topologies/foobar/clab-foobar/fceos5/flash/startup-config' for node 'fceos5' already exists and will not be generated/reset

31-May-2025 13:02:31.302 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:31 INFO Creating container name=fceos5

31-May-2025 13:02:32.065 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth1 ▪┄┄▪ fceos4:eth1

31-May-2025 13:02:32.220 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth2 ▪┄┄▪ fceos4:eth2

31-May-2025 13:02:32.592 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth3 ▪┄┄▪ fceos4:eth3

31-May-2025 13:02:32.744 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth4 ▪┄┄▪ fceos4:eth4

31-May-2025 13:02:32.844 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth6 ▪┄┄▪ fceos4:eth6

31-May-2025 13:02:32.953 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Created link: fceos5:eth7 ▪┄┄▪ fceos4:eth7

31-May-2025 13:02:32.964 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:32 INFO Running postdeploy actions for Arista cEOS 'fceos5' node

31-May-2025 13:02:33.005 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:33 INFO Created link: fceos5:eth8 ▪┄┄▪ fceos4:eth101

31-May-2025 13:02:33.053 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:33 INFO Created link: fceos5:eth11 ▪┄┄▪ fceos4:eth11

31-May-2025 13:02:33.064 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:33 INFO Running postdeploy actions for Arista cEOS 'fceos4' node

31-May-2025 13:02:54.730 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:54 INFO Adding host entries path=/etc/hosts

31-May-2025 13:02:54.742 INFO containerlab-worker-1 running containerlab.deploy_netbox - 13:02:54 INFO Adding SSH config for nodes path=/etc/ssh/ssh_config.d/clab-foobar.conf

31-May-2025 13:02:54.859 9e3b29210e1140f8b3a311e8c4669ca4 job completed in 25.334 seconds

--------------------------------------------- Job Results --------------------------------------------

containerlab-worker-1:

----------

containers:

|_

----------

lab_name:

foobar

labPath:

foobar.yaml

name:

clab-foobar-fceos4

container_id:

c24aa0089eca

image:

ceosimage:4.30.0F

kind:

ceos

state:

running

ipv4_address:

172.100.102.2/24

N/A

owner:

norfab

|_

lab_name:

foobar

labPath:

foobar.yaml

name:

clab-foobar-fceos5

container_id:

2098290d7a79

image:

ceosimage:4.30.0F

kind:

ceos

state:

running

ipv4_address:

172.100.102.3/24

ipv6_address:

N/A

owner:

norfab

nf#show containerlab labs

containerlab-worker-1:

- foobar

nf#

In this example:

nfclicommand starts the NorFab Interactive Shellcontainerlabcommand switches to the Containerlab sub-shelldeploy_netboxcommand instruct Containerlab service to deploy a topologydevicesspecifies list of devices to fetch data and links for from netbox

This code is complete and can run as is.

import pprint

from norfab.core.nfapi import NorFab

if __name__ == '__main__':

nf = NorFab(inventory="inventory.yaml")

nf.start()

client = nf.make_client()

res = client.run_job(

service="containerlab",

task="deploy_netbox",

kwargs={

"devices": ["fceos4", "fceos5"],

"lab_name": "foobar"

}

)

pprint.pprint(res)

nf.destroy()

Reconfiguring an Existing Lab¤

The deploy_netbox task supports reconfiguring an already deployed lab by using the reconfigure argument. This allows you to update the lab configuration without destroying and redeploying it.

Filtering Nodes for Deployment¤

The deploy_netbox task allows you to deploy specific nodes in a topology using the node_filter argument. This is useful for testing or updating specific parts of a lab without affecting the entire topology.

NORFAB Containerlab CLI Shell Reference¤

Below are the commands supported by the deploy_netbox task:

nf#man tree containerlab.deploy-netbox

└── containerlab: Containerlab service

└── deploy-netbox: Spins up a lab using devices data from Netbox

├── timeout: Job timeout

├── workers: Filter worker to target, default 'any'

├── verbose-result: Control output details, default 'False'

├── lab-name: Lab name to generate lab inventory for

├── tenant: Tenant name to generate lab inventory for

├── filters: Netbox device filters to generate lab inventory for

│ ├── tenant: Filter devices by tenants

│ ├── device-name-contains: Filter devices by name pattern

│ ├── model: Filter devices by models

│ ├── platform: Filter devices by platforms

│ ├── region: Filter devices by regions

│ ├── role: Filter devices by roles

│ ├── site: Filter devices by sites

│ ├── status: Filter devices by statuses

│ └── tag: Filter devices by tags

├── devices: List of devices to generate lab inventory for

├── progress: Display progress events, default 'True'

├── netbox-instance: Name of Netbox instance to pull inventory from

├── ipv4-subnet: IPv4 management subnet to use for lab, default '172.100.100.0/24'

├── image: Docker image to use for all nodes

├── ports: Range of TCP/UDP ports to use for nodes, default '[12000, 13000]'

├── reconfigure: Destroy the lab and then re-deploy it., default 'False'

└── dry-run: Do not deploy, only fetch inventory from Netbox

nf#

* - mandatory/required command argument

Python API Reference¤

Deploys a containerlab topology using device data from the Netbox database.

This method orchestrates the deployment of a containerlab topology by:

- Inspecting existing containers to determine subnets and ports in use.

- Allocating a management IPv4 subnet for the new lab, avoiding conflicts.

- Downloading inventory data from Netbox for the specified lab and filters.

- Saving the generated topology file to a dedicated folder.

- Executing the

containerlab deploycommand with appropriate arguments.

To retrieve topology data from Netbox at least one of these arguments must be provided to identify a set of devices to include into Containerlab topology:

tenant- deploys lab using all devices and links that belong to this tenantdevices- lab deployed only using devices in the listsfilters- list of device filters to retrieve from Netbox

If multiple of above arguments provided, resulting lab topology is a sum of all devices matched.

Parameters:

| Name | Type | Description | Default |

|---|---|---|---|

lab_name

|

str

|

The name to use for the lab to deploy. |

None

|

tenant

|

str

|

Deploy lab for given tenant, lab name if not set becomes equal to tenant name. |

None

|

filters

|

list

|

List of filters to apply when fetching devices from Netbox. |

None

|

devices

|

list

|

List of specific devices to include in the topology. |

None

|

instance

|

str

|

Netbox instance identifier. |

None

|

image

|

str

|

Container image to use for devices. |

None

|

ipv4_subnet

|

str

|

Management IPv4 subnet for the lab. |

'172.100.100.0/24'

|

ports

|

tuple

|

Tuple specifying the range of ports to allocate. |

(12000, 15000)

|

progress

|

bool

|

If True, emits progress events. |

False

|

reconfigure

|

bool

|

If True, reconfigures an already deployed lab. |

False

|

timeout

|

int

|

Timeout in seconds for the deployment process. |

600

|

node_filter

|

str

|

Comma-separated string of nodes to deploy. |

None

|

dry_run

|

bool

|

If True, only generates and returns the topology inventory without deploying. |

False

|

Returns:

| Name | Type | Description |

|---|---|---|

Result |

Result

|

deployment results with a list of nodes deployed |

Raises:

| Type | Description |

|---|---|

Exception

|

If the topology file cannot be fetched. |

Source code in norfab\workers\containerlab_worker.py

704 705 706 707 708 709 710 711 712 713 714 715 716 717 718 719 720 721 722 723 724 725 726 727 728 729 730 731 732 733 734 735 736 737 738 739 740 741 742 743 744 745 746 747 748 749 750 751 752 753 754 755 756 757 758 759 760 761 762 763 764 765 766 767 768 769 770 771 772 773 774 775 776 777 778 779 780 781 782 783 784 785 786 787 788 789 790 791 792 793 794 795 796 797 798 799 800 801 802 803 804 805 806 807 808 809 810 811 812 813 814 815 816 817 818 819 820 821 822 823 824 825 826 827 828 829 830 831 832 833 834 835 836 837 838 839 840 841 842 843 844 845 846 847 848 849 850 851 852 853 854 855 856 857 858 859 860 861 862 863 864 865 866 867 868 869 870 871 872 873 874 875 876 877 878 879 880 881 882 883 884 885 886 887 888 889 890 891 892 893 894 895 896 | |